前言

这个问题其实是今年3月份的时候发生的,最近整理文件看到之前留下的一些文件,故重新做个记录。

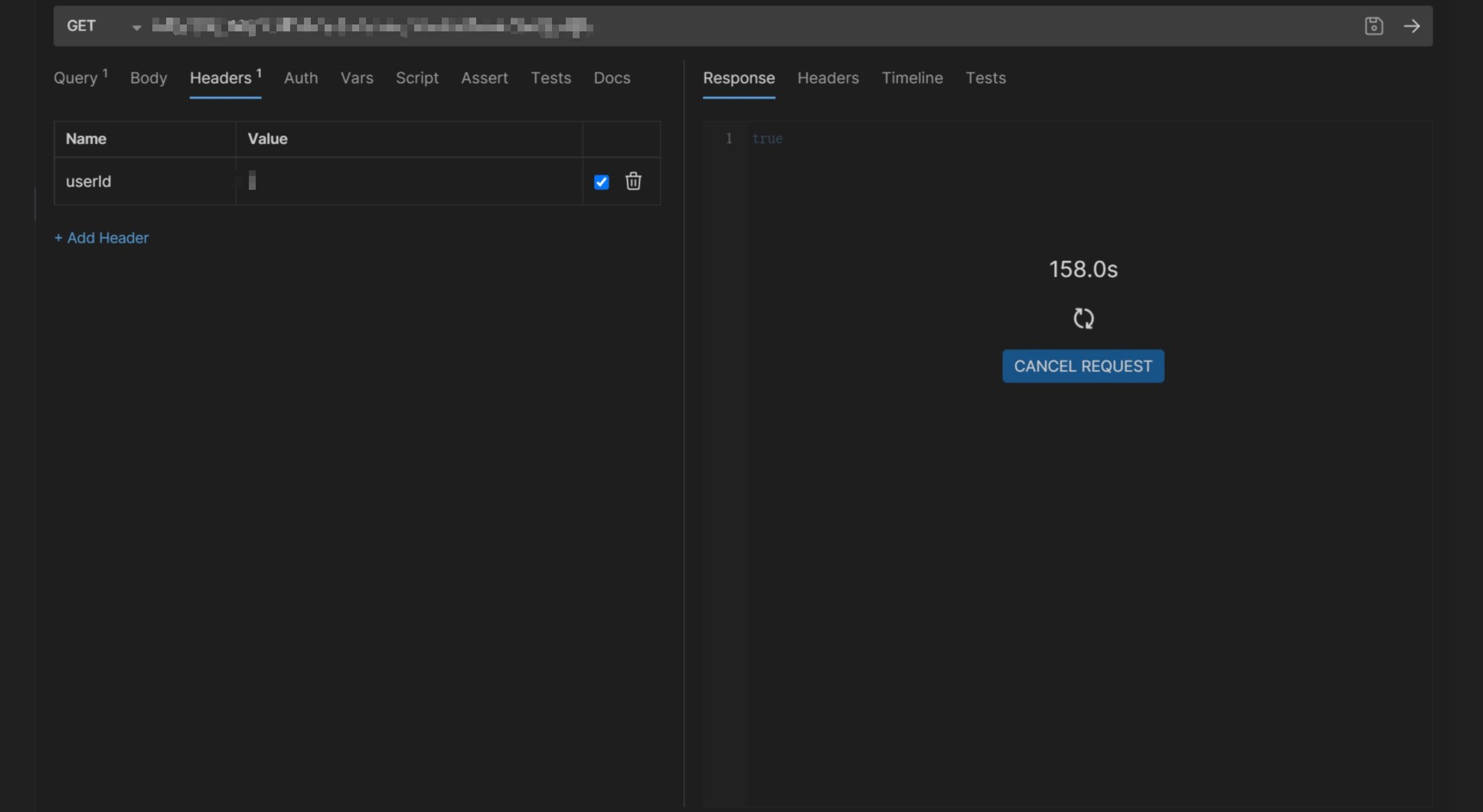

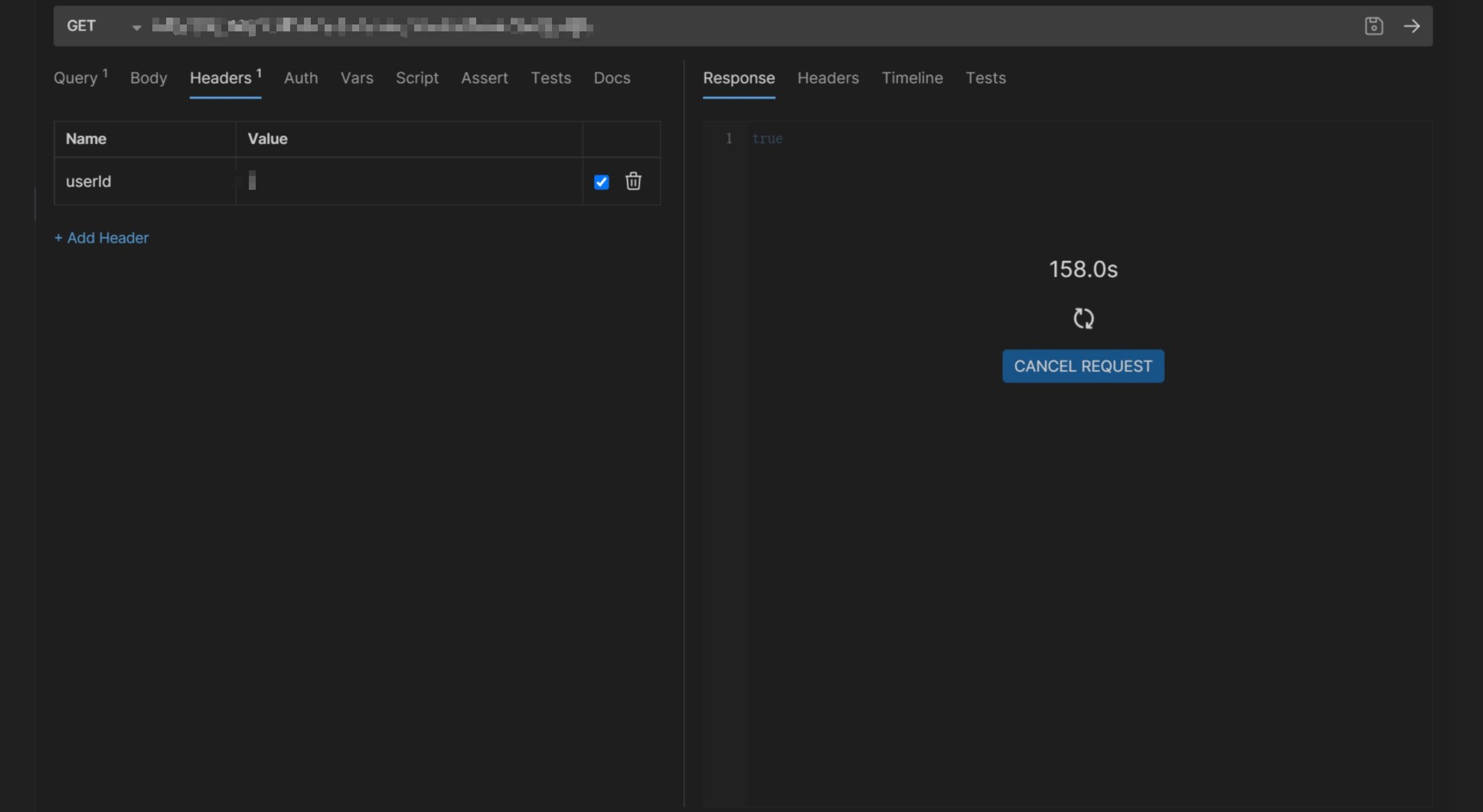

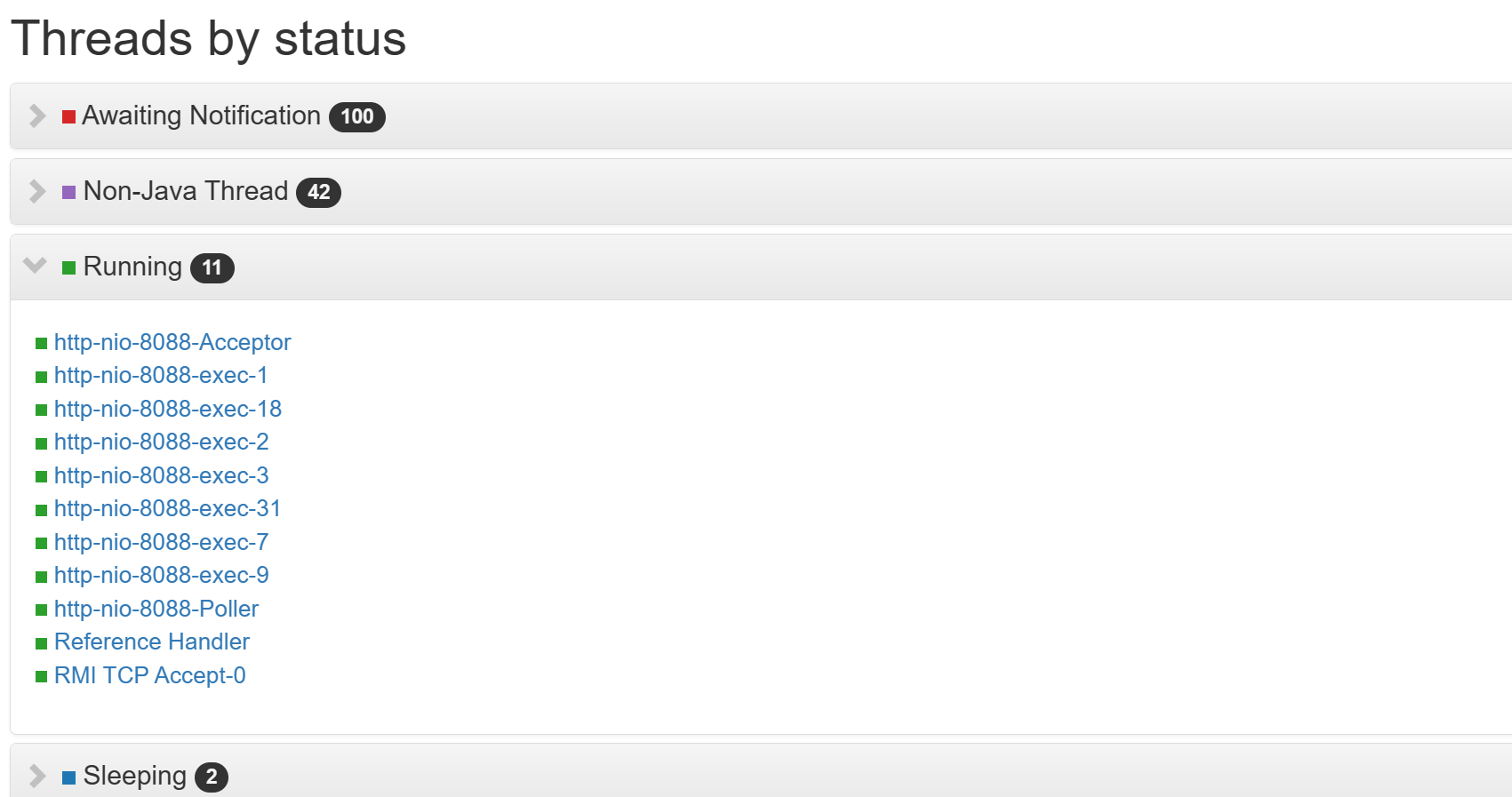

原因是公司某个服务遇到了一个很奇怪的问题,表现为服务进入假死状态,必须要重启,否则所有请求都会被挂起,表现如下图:

而且这个时候服务什么日志都没有打印。该服务运行几年了,之前也没有遇到过这种情况。

问题排查

对于这个情况第一时间想到的就是服务出现死锁了,导致服务假死了,所以当再次出现时,使用jstack把线程的信息打印出来进行分析,由于是docker部署的,为了不影响使用先把日志导出到一个文件,再从docker容器复制出来。

jstack -l PID > thread_stack_xxx.log

docker cp containerID:/home/thread_stack_xxx.log ~/

从日志看,能看到一些线程WAITING了(排除掉tomcat启动创建的核心线程),阻塞到takeLast方法中,部分日志如下:

"http-nio-8088-exec-1" #38 daemon prio=5 os_prio=0 cpu=0.00ms elapsed=29.93s tid=0x0000027a059aacc0 nid=0x731c waiting on condition [0x000000a0981fc000]

java.lang.Thread.State: WAITING (parking)

at jdk.internal.misc.Unsafe.park([email protected]/Native Method)

- parking to wait for <0x0000000402501a20> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)

at java.util.concurrent.locks.LockSupport.park([email protected]/LockSupport.java:341)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionNode.block([email protected]/AbstractQueuedSynchronizer.java:506)

at java.util.concurrent.ForkJoinPool.unmanagedBlock([email protected]/ForkJoinPool.java:3465)

at java.util.concurrent.ForkJoinPool.managedBlock([email protected]/ForkJoinPool.java:3436)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await([email protected]/AbstractQueuedSynchronizer.java:1623)

at com.alibaba.druid.pool.DruidDataSource.takeLast(DruidDataSource.java:2283)

at com.alibaba.druid.pool.DruidDataSource.getConnectionInternal(DruidDataSource.java:1755)

at com.alibaba.druid.pool.DruidDataSource.getConnectionDirect(DruidDataSource.java:1470)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_connect(FilterChainImpl.java:5058)

at com.alibaba.druid.filter.FilterAdapter.dataSource_getConnection(FilterAdapter.java:2759)

at com.alibaba.druid.filter.FilterChainImpl.dataSource_connect(FilterChainImpl.java:5054)

at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:1448)

at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:1440)

at com.alibaba.druid.pool.DruidDataSource.getConnection(DruidDataSource.java:83)

at org.springframework.jdbc.datasource.DataSourceTransactionManager.doBegin(DataSourceTransactionManager.java:265)

at org.springframework.transaction.support.AbstractPlatformTransactionManager.startTransaction(AbstractPlatformTransactionManager.java:400)

at org.springframework.transaction.support.AbstractPlatformTransactionManager.getTransaction(AbstractPlatformTransactionManager.java:373)

at org.springframework.transaction.interceptor.TransactionAspectSupport.createTransactionIfNecessary(TransactionAspectSupport.java:595)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:382)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:119)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:763)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:708)

at org.lgq.transactiontest.service.impl.AccountServiceImpl$$EnhancerBySpringCGLIB$$36755aa4.updateName(<generated>)

at org.lgq.transactiontest.controller.AccountSafeController.updateQuestion(AccountSafeController.java:25)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke0([email protected]/Native Method)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke([email protected]/NativeMethodAccessorImpl.java:77)

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke([email protected]/DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke([email protected]/Method.java:568)

at org.springframework.web.method.support.InvocableHandlerMethod.doInvoke(InvocableHandlerMethod.java:205)

at org.springframework.web.method.support.InvocableHandlerMethod.invokeForRequest(InvocableHandlerMethod.java:150)

at org.springframework.web.servlet.mvc.method.annotation.ServletInvocableHandlerMethod.invokeAndHandle(ServletInvocableHandlerMethod.java:117)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.invokeHandlerMethod(RequestMappingHandlerAdapter.java:895)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.handleInternal(RequestMappingHandlerAdapter.java:808)

at org.springframework.web.servlet.mvc.method.AbstractHandlerMethodAdapter.handle(AbstractHandlerMethodAdapter.java:87)

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:1072)

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:965)

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:1006)

at org.springframework.web.servlet.FrameworkServlet.doGet(FrameworkServlet.java:898)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:502)

at org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:883)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:596)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:209)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:153)

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:53)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:178)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:153)

at org.springframework.web.filter.RequestContextFilter.doFilterInternal(RequestContextFilter.java:100)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:117)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:178)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:153)

at org.springframework.web.filter.FormContentFilter.doFilterInternal(FormContentFilter.java:93)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:117)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:178)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:153)

at org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:201)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:117)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:178)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:153)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:167)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:90)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:492)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:130)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:93)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:74)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:343)

at org.apache.coyote.http11.Http11Processor.service(Http11Processor.java:389)

at org.apache.coyote.AbstractProcessorLight.process(AbstractProcessorLight.java:63)

at org.apache.coyote.AbstractProtocol$ConnectionHandler.process(AbstractProtocol.java:926)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1791)

at org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49)

- locked <0x0000000440873d30> (a org.apache.tomcat.util.net.NioEndpoint$NioSocketWrapper)

at org.apache.tomcat.util.threads.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1191)

at org.apache.tomcat.util.threads.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:659)

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61)

at java.lang.Thread.run([email protected]/Thread.java:840)

Locked ownable synchronizers:

- <0x000000040485f520> (a org.apache.tomcat.util.threads.ThreadPoolExecutor$Worker)

....

Druid源码分析

从上面日志知道是Druid的连接池队列阻塞等待了,定位到源码com.alibaba.druid.pool.DruidDataSource#takeLast,不过有点奇怪,不应该进入takeLast的,因为这个方法名,如果从阻塞队列的实现看的话,是没有超时时间的,但是配置文件明明是有配置最大等待时间的。

先进源码分析看看:

DruidConnectionHolder takeLast() throws InterruptedException, SQLException {

try {

// 循环判断连接池连接数是否空了

while (poolingCount == 0) {

// 发送创建连接的信号

// 创建成功后,唤醒后再次循环进来 poolingCount 就不等于0了

emptySignal(); // send signal to CreateThread create connection

if (failFast && isFailContinuous()) {

throw new DataSourceNotAvailableException(createError);

}

// 等待的线程数+1,不为空,CreateConnectionThread需要用于判断

notEmptyWaitThreadCount++;

if (notEmptyWaitThreadCount > notEmptyWaitThreadPeak) {

notEmptyWaitThreadPeak = notEmptyWaitThreadCount;

}

try {

// 连接数用完了,进入等待,有连接可用时被唤醒

notEmpty.await();

} finally {

notEmptyWaitThreadCount--;

}

notEmptyWaitCount++;

if (!enable) {

connectErrorCountUpdater.incrementAndGet(this);

if (disableException != null) {

throw disableException;

}

throw new DataSourceDisableException();

}

}

} catch (InterruptedException ie) {

notEmpty.signal(); // propagate to non-interrupted thread

notEmptySignalCount++;

throw ie;

}

decrementPoolingCount();

DruidConnectionHolder last = connections[poolingCount];

connections[poolingCount] = null;

return last;

}

发送连接池空信号方法:

private void emptySignal() {

// 这里的 createScheduler 等于 null,并没有使用到

if (createScheduler == null) {

// 唤醒创建连接的线程

// 该线程在 com.alibaba.druid.pool.DruidDataSource#init 方法中创建

// 创建方法:createAndStartCreatorThread()

empty.signal();

return;

}

// 创建任务数 大于等于 可创建连接任务的最大数

if (createTaskCount >= maxCreateTaskCount) {

return;

}

// 活跃连接数 + 可用连接池数 + 创建任务数 大于等于 最大活跃数

if (activeCount + poolingCount + createTaskCount >= maxActive) {

return;

}

// 提交任务到创建连接的调度线程池(这里没有用到)

submitCreateTask(false);

}

查看创建连接的类com.alibaba.druid.pool.DruidDataSource.CreateConnectionThread:

// com.alibaba.druid.pool.DruidDataSource.CreateConnectionThread

public class CreateConnectionThread extends Thread {

public CreateConnectionThread(String name) {

super(name);

// 守护线程

this.setDaemon(true);

}

public void run() {

initedLatch.countDown();

long lastDiscardCount = 0;

int errorCount = 0;

// 循环执行

for (; ; ) {

// addLast

try {

lock.lockInterruptibly();

} catch (InterruptedException e2) {

break;

}

long discardCount = DruidDataSource.this.discardCount;

boolean discardChanged = discardCount - lastDiscardCount > 0;

lastDiscardCount = discardCount;

try {

boolean emptyWait = true;

// 不进入这里,createError == null

if (createError != null

&& poolingCount == 0

&& !discardChanged) {

emptyWait = false;

}

if (emptyWait

&& asyncInit && createCount < initialSize) {

emptyWait = false;

}

// emptyWait为true,进入

if (emptyWait) {

// 必须存在线程等待,才创建连接

if (poolingCount >= notEmptyWaitThreadCount

&& (!(keepAlive && activeCount + poolingCount < minIdle))

&& !isFailContinuous()

) {

empty.await();

}

// 防止创建超过maxActive数量的连接,创建线程进入等待

if (activeCount + poolingCount >= maxActive) {

empty.await();

continue;

}

}

} catch (InterruptedException e) {

lastCreateError = e;

lastErrorTimeMillis = System.currentTimeMillis();

if ((!closing) && (!closed)) {

LOG.error("create connection Thread Interrupted, url: " + jdbcUrl, e);

}

break;

} finally {

lock.unlock();

}

PhysicalConnectionInfo connection = null;

try {

// 创建连接

connection = createPhysicalConnection();

} catch (SQLException e) {

LOG.error("create connection SQLException, url: " + jdbcUrl + ", errorCode " + e.getErrorCode()

+ ", state " + e.getSQLState(), e);

errorCount++;

if (errorCount > connectionErrorRetryAttempts && timeBetweenConnectErrorMillis > 0) {

// fail over retry attempts

setFailContinuous(true);

if (failFast) {

lock.lock();

try {

notEmpty.signalAll();

} finally {

lock.unlock();

}

}

if (breakAfterAcquireFailure) {

break;

}

try {

Thread.sleep(timeBetweenConnectErrorMillis);

} catch (InterruptedException interruptEx) {

break;

}

}

} catch (RuntimeException e) {

LOG.error("create connection RuntimeException", e);

setFailContinuous(true);

continue;

} catch (Error e) {

LOG.error("create connection Error", e);

setFailContinuous(true);

break;

}

if (connection == null) {

continue;

}

// 创建连接成功后,把连接放入连接池,同时会把 可用连接数 poolingCount + 1 和 唤醒等待的线程

boolean result = put(connection);

if (!result) {

JdbcUtils.close(connection.getPhysicalConnection());

LOG.info("put physical connection to pool failed.");

}

errorCount = 0; // reset errorCount

if (closing || closed) {

break;

}

}

}

}

通过上面的源码分析,可以知道因为 activeCount + poolingCount >= maxActive 而导致一直阻塞,线程没有被唤醒了。

统计下当前的Tomcat的线程数,一共102个;而处于WAITING的线程有100个,那就是活跃的就2个,然后排除掉Tomcat用于接收请求的Acceptor线程和分派请求的Poller线程,那实际就是线程都处于WAITING。

# 统计当前的tomca线程数

cat thread_stack_xxx.log | grep "http-nio" | wc -l

102

# 统计所有WAITING的线程

# grep -E "^\s+java.lang.Thread.State: WAITING" <jstack_log_file> | grep "http-nio" | wc -l

# 统计tomcat中当前 WAITING 的线程数

grep -A1 "http-nio" thread_stack_xxx.log | grep "java.lang.Thread.State: WAITING" | wc -l

100

线程统计分析的也可以使用https://jstack.review/ 或 https://fastthread.io/ 网站来分析thread的情况,例如下面:

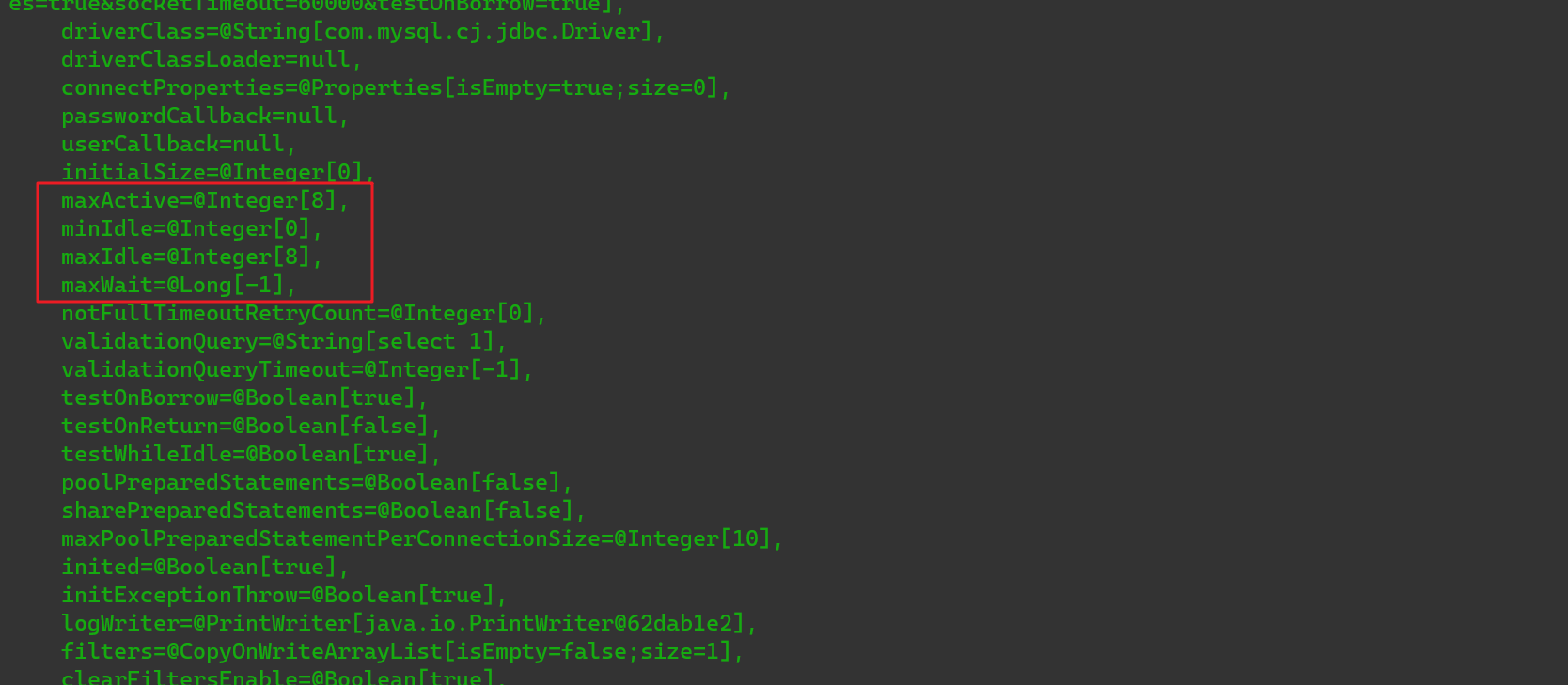

服务的Druid配置如下,配置max-active是100的,然后处于takeLast阻塞的有8个,还有个问题就是排队应该超时6s会报错的,但是却没有,到这就其实可以猜测是Druid的配置被修改了。

spring:

application:

name: transaction-test

datasource:

druid:

initial-size: 5

min-idle: 5

max-active: 100

max-wait: 60000

pool-prepared-statements: false

max-pool-prepared-statement-per-connection-size: 20

validation-query: SELECT 'x' FROM DUAL

validation-query-timeout: 30000

test-on-borrow: false

test-on-return: false

test-while-idle: true

time-between-eviction-runs-millis: 60000

min-evictable-idle-time-millis: 300000

max-evictable-idle-time-millis: 300000

filters: stat

stat-view-servlet:

enabled: true

url-pattern: /druid/*

reset-enable: true

login-username: xxxxx

login-password: xxxxx

获取线上环境Druid的配置

这里使用arthas线上环境查询spring bean中Druid的配置,运行arthas然后attach上服务进程。

# 用 tt 命令记录请求

tt -t org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter invokeHandlerMethod

访问随意一个接口,打印如下:

Press Q or Ctrl+C to abort.

Affect(class count: 1 , method count: 1) cost in 79 ms, listenerId: 1

INDEX TIMESTAMP COST(ms) IS-RET IS-EXP OBJECT CLASS METHOD

----------------------------------------------------------------------------------------------------------------------------------

1000 2023-03-30 13:40:3 41.1308 true false 0x73abf189 RequestMappingHandlerAdapter invokeHandlerMethod

5

打印出来的index是1000,通过tt命令的-i指令来指定index,用-w指令执行ognl表达式获取spring context。

tt -i 1000 -w 'target.getApplicationContext()'

执行指令后,打印了所有的bean对象出来,说明获取到成功,继续调用getBean获取具体的bean:

# 根据类型来获取数据库连接信息,也可以根据名称"xxxx"

tt -i 1000 -w 'target.getApplicationContext().getBean(@javax.sql.DataSource@class)'

很明显就和配置文件的不一致了,应该是被覆盖了。直接去服务工程源码搜一下,果然有个DruidConfig配置类,而且参数什么也没加,创建完就set了Filter后就返回了,这样的话DataSource的配置肯定是默认配置了。

@Bean

@ConfigurationProperties(prefix = "spring.datasource")

public DataSource druidDataSource() {

DruidDataSource druidDataSource = new DruidDataSource();

List<Filter> filterList = new ArrayList<>();

filterList.add(wallFilter());

druidDataSource.setProxyFilters(filterList);

return druidDataSource;

}

到这里就解释通了,为什么配置的maxWait没生效,因为默认值是 -1,是没有超时时间的,所以当时我看到线程栈信息调用takeLast()方法就觉得奇怪了,源码的判断如下:

// com.alibaba.druid.pool.DruidDataSource#getConnectionInternal

if (maxWait > 0) {

holder = pollLast(nanos);

} else {

holder = takeLast();

}

但是问题还没有解决,线程为什么阻塞了?

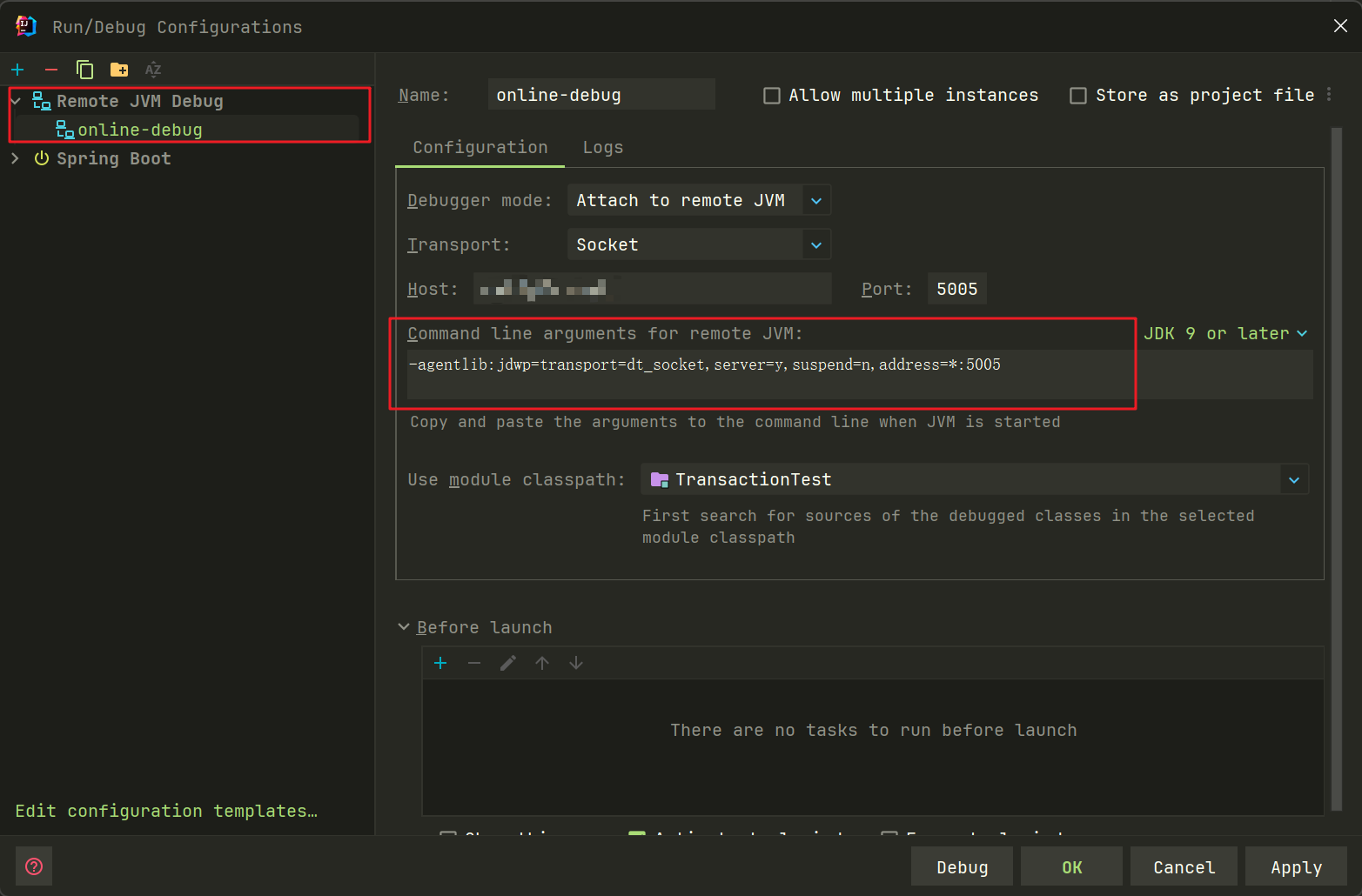

远程Debug定位问题

经过上面的排查,已经知道配置被覆盖的原因,导致配置没有生效,数据库连接会被快速消耗而导致新来的请求进入排队。

下面排查下业务代码导致阻塞的原因,直接在获取获取连接的源码处打断点定位原因。

注意,一般生产环境是不允许debug的,这里因为实在不知道是哪个接口造成的,所以使用远程Debug的方式,这样会比加日志然后再打包可以会更快定位到原因。

PS:这里也可以把日志级别调到debug,但是这样会打印大量日志,影响排查。

先修改生产环境docker-compose文件,添加-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:500启动参数,并且把调试端口暴露出来,当再次出现假死时,重启服务就可以远程debug了。

version: '3.9'

services:

xxx-test:

image: xxx-test:latest

hostname: xxx-test

privileged: true

ports:

- "8088:8088"

- "5005:5005" # 暴露调试端口

# 修改启动参数

entrypoint: ["java", "-jar", "-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:5005", "-Xms512m", "-Xmx512m", "/usr/local/TransactionTest-0.0.1-SNAPSHOT.jar"]

networks:

- bridge

deploy:

replicas: 1

restart_policy:

condition: on-failure

placement:

constraints: [node.labels.type == master]

labels: [type=basic]

networks:

bridge:

external: true

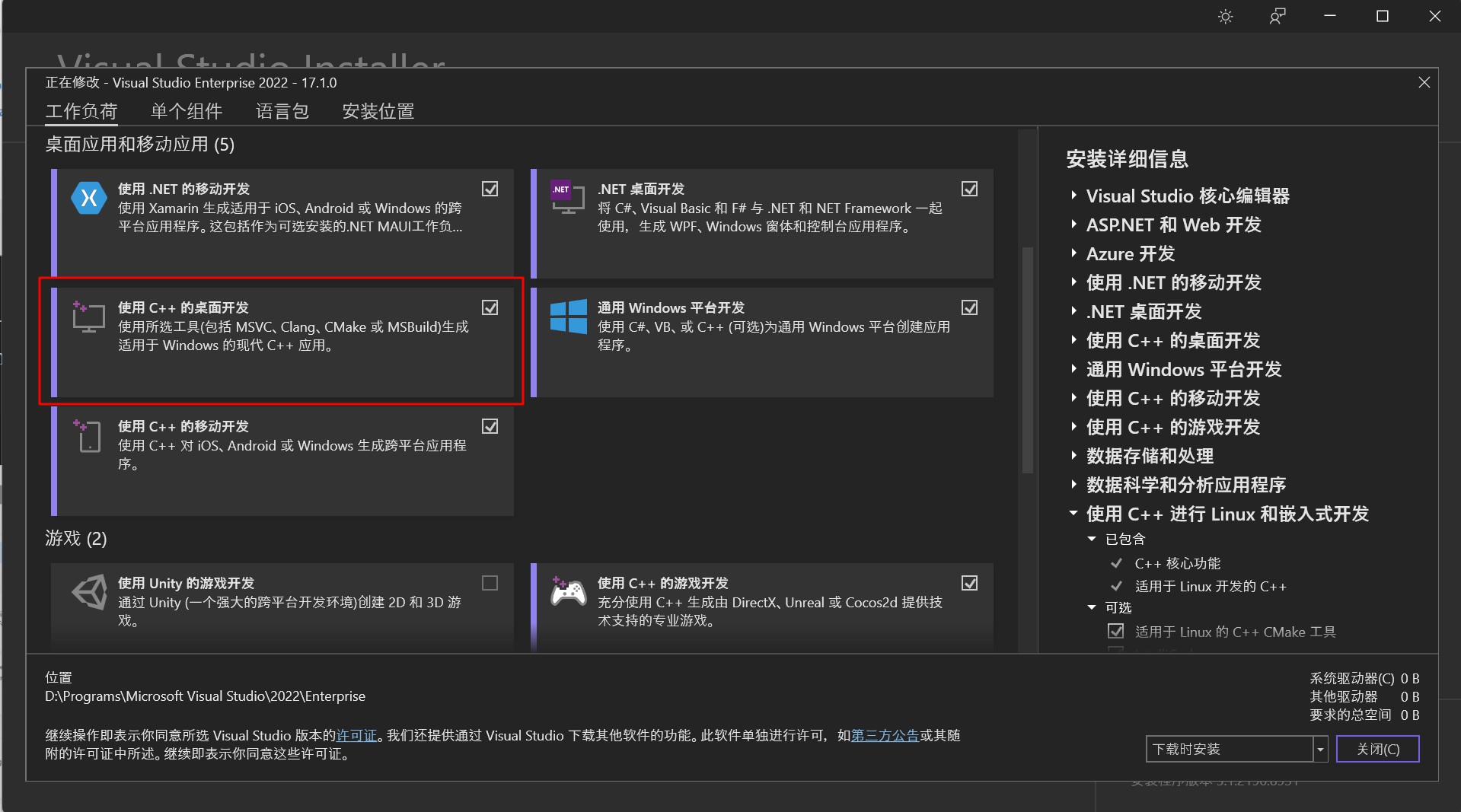

Idea添加远程debug配置-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:5005,如下图

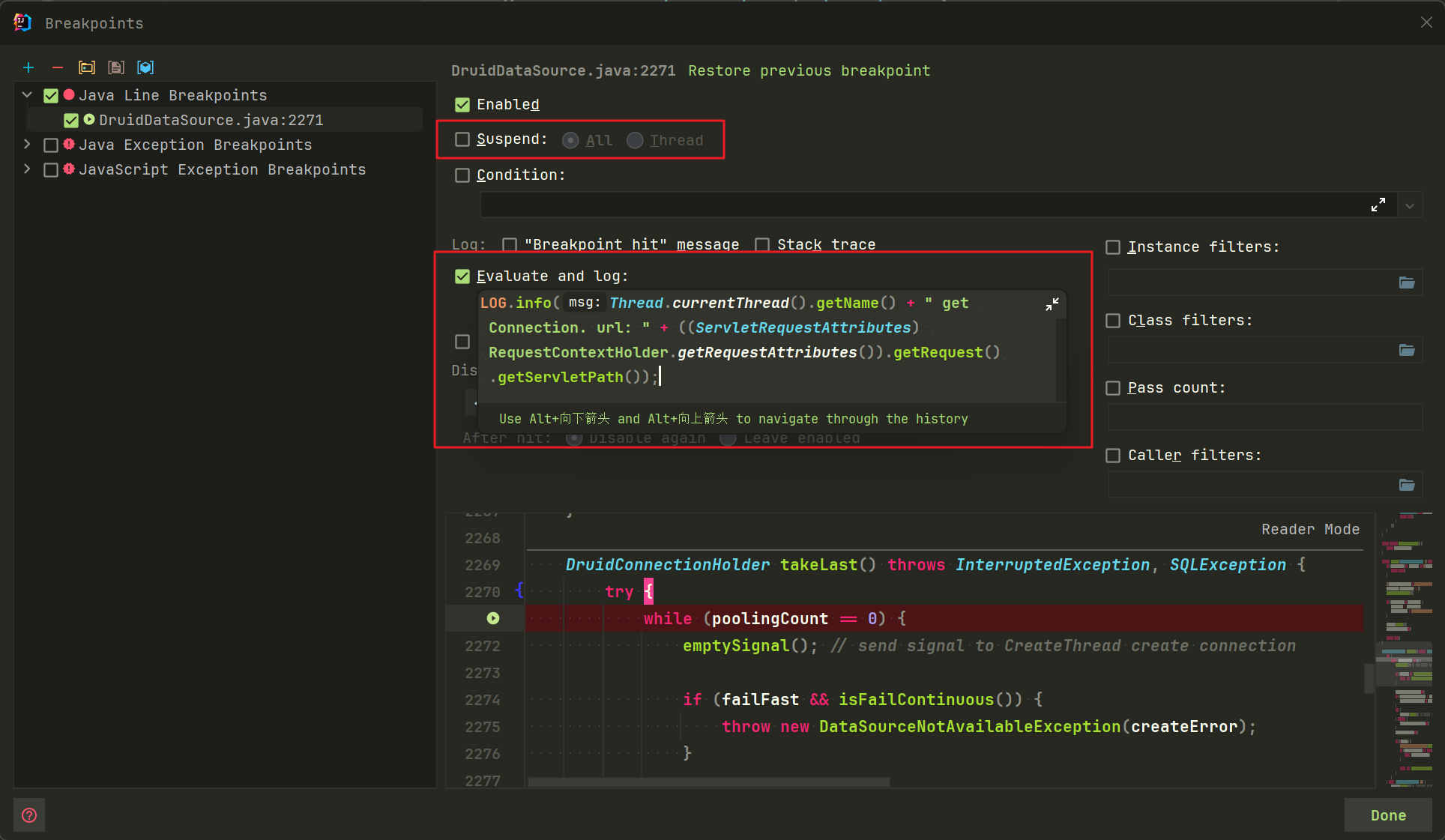

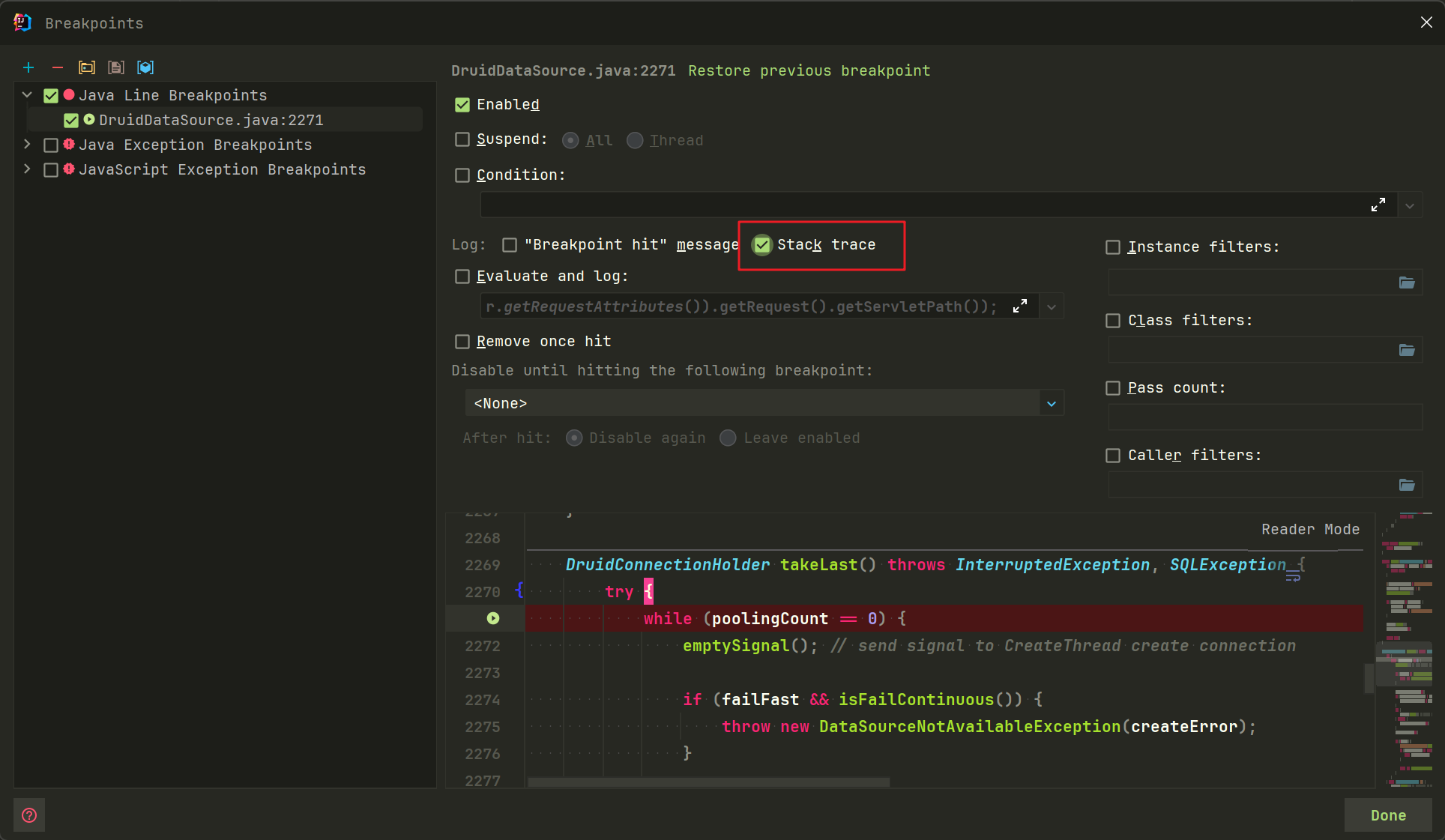

在源码com.alibaba.druid.pool.DruidDataSource#takeLast处打个断点,但是要注意,不能把程序挂起,这样会严重影响到生产环境。进入断点配置,把Suspend关闭,然后添加一些日志打印,这样方便找到假死时前的接口访问情况。

LOG.info(Thread.currentThread().getName() + " get Connection. url: " + ((ServletRequestAttributes) RequestContextHolder.getRequestAttributes()).getRequest().getServletPath());

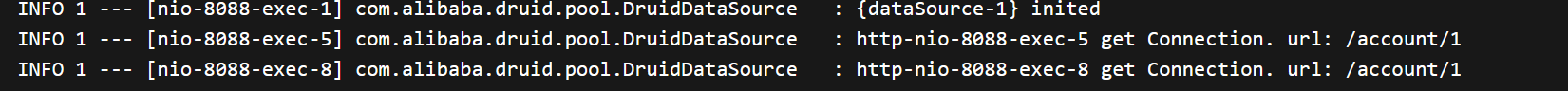

随意访问一个接口,可以看到打印出来了:

之后等再复现时,把日志文件复制出来和jstack也打印一份复制出来分析原因,然后关闭远程debug,这玩意挺影响性能的。

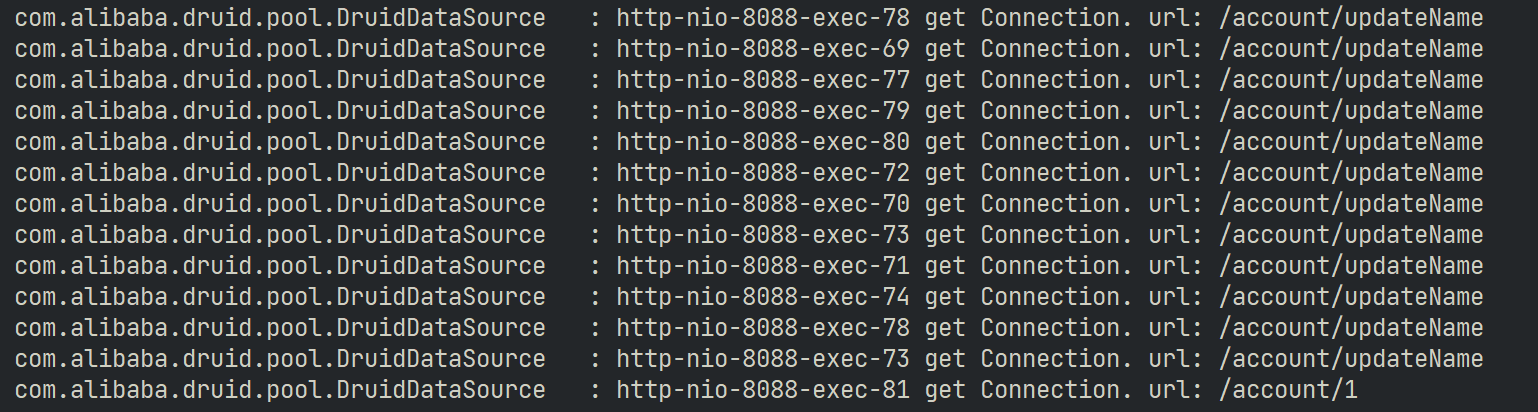

最后经过复现后的日志查看,定位到是/account/updateName这接口导致的,最后一个接口是手动调用的,已经被卡住了。

本地代码调试解决问题

上面经过排查,已经定位到出问题的接口了,下面可以本地调试解决问题了。

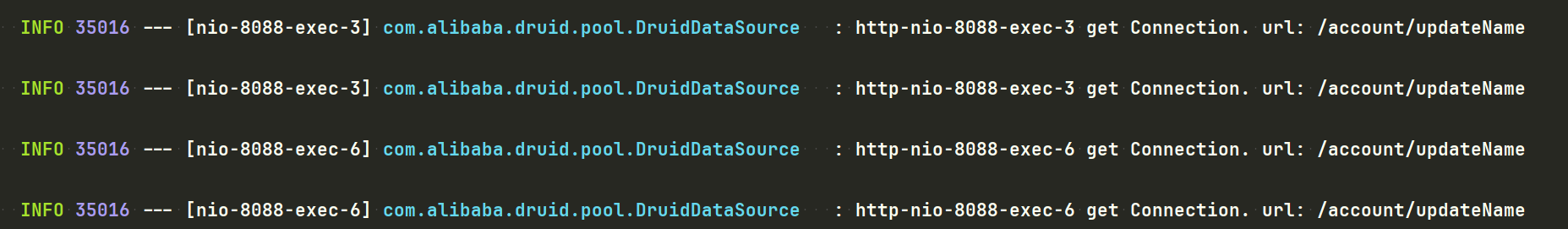

这个接口的逻辑其实很简单,就是更新数据库后还会查询一次数据库。依旧是打个断点在com.alibaba.druid.pool.DruidDataSource#takeLast,然后访问查看日志输出,每访问一次就获取2次连接,明显不对劲。

这里修改下断点配置,把调用栈打印出来。

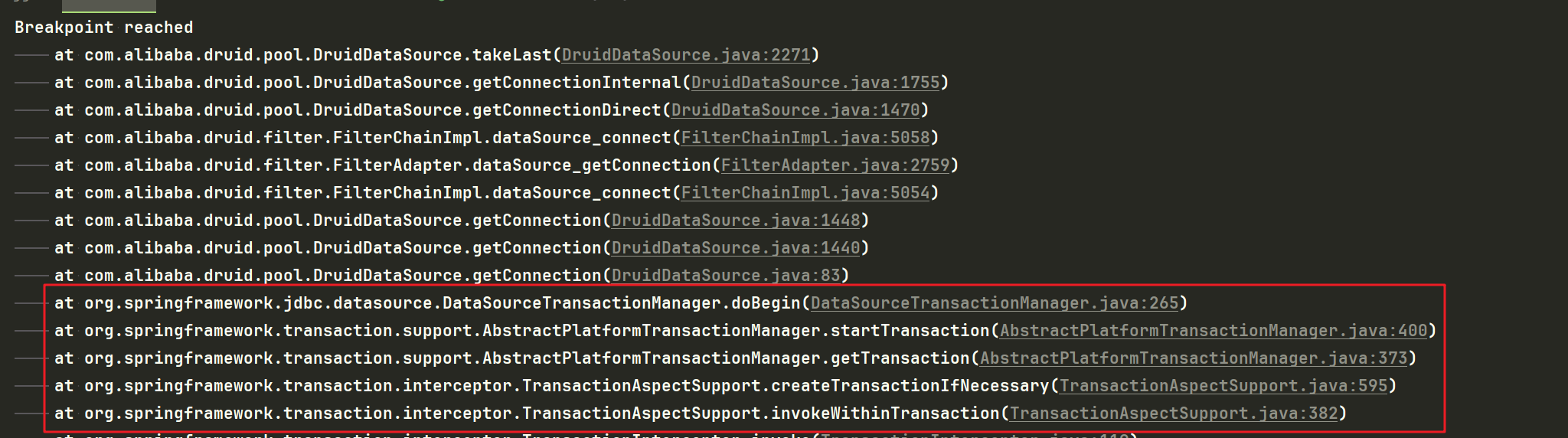

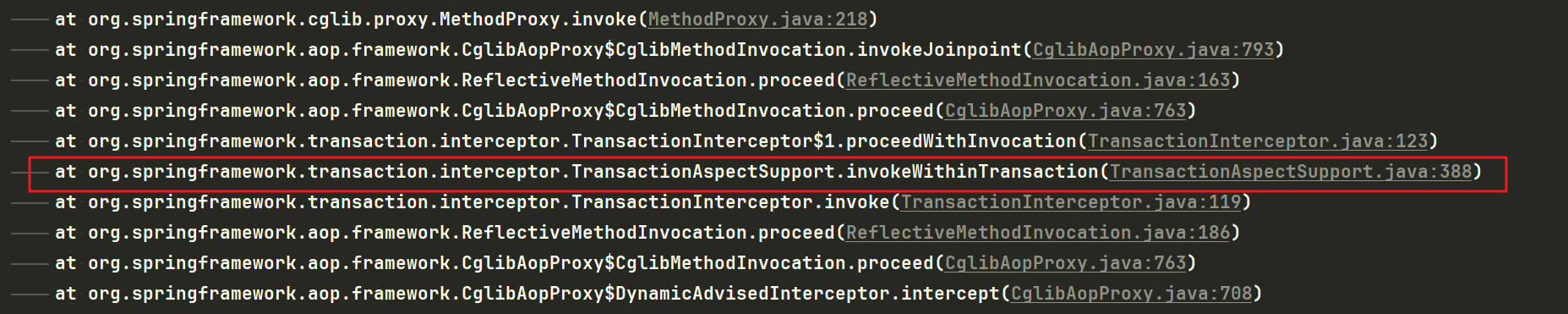

第一次获取连接的调用栈信息,开启了个事务,因为是个更新操作。

第二次获取连接时,调用时发现也有个事务,到这里,其实就已经大致知道原因了,应该是事务嵌套和事务传播的配置问题了。

跳到该方法第二次调用的service接口,好家伙,直接在这个service类上面加了@Transactional注解,传播级别是Propagation.NOT_SUPPORTED。

@Transactional(readOnly = true, propagation = Propagation.NOT_SUPPORTED)

看下源码对这个传播级别的解释:

/**

* 以非事务的方式执行,如果存在事务就把 当前事务挂起。

* Execute non-transactionally, suspend the current transaction if one exists.

* Analogous to EJB transaction attribute of the same name.

* <p><b>NOTE:</b> Actual transaction suspension will not work out-of-the-box

* on all transaction managers. This in particular applies to

* {@link org.springframework.transaction.jta.JtaTransactionManager},

* which requires the {@code javax.transaction.TransactionManager} to be

* made available to it (which is server-specific in standard Java EE).

* @see org.springframework.transaction.jta.JtaTransactionManager#setTransactionManager

*/

NOT_SUPPORTED(TransactionDefinition.PROPAGATION_NOT_SUPPORTED),

下面看下获取事务org.springframework.transaction.support.AbstractPlatformTransactionManager#getTransaction的源码:

public final TransactionStatus getTransaction(@Nullable TransactionDefinition definition)

throws TransactionException {

// 如果传进来是空的,使用默认的事务定义,默认是 PROPAGATION_REQUIRED 级别的

TransactionDefinition def = (definition != null ? definition : TransactionDefinition.withDefaults());

// 这里返回是 DataSourceTransactionObject 类

// 里面有个成员变量是 ConnectionHolder,该变量可以判断是否有事务激活了

Object transaction = doGetTransaction();

boolean debugEnabled = logger.isDebugEnabled();

// 判断是否已经有存在的事务

if (isExistingTransaction(transaction)) {

// 这里就是对嵌套事务的处理逻辑,判断事务的传播级别

return handleExistingTransaction(def, transaction, debugEnabled);

}

// 检查超时时间

if (def.getTimeout() < TransactionDefinition.TIMEOUT_DEFAULT) {

throw new InvalidTimeoutException("Invalid transaction timeout", def.getTimeout());

}

// 判断是否需要调用方法必须存在事务,如果是的话,直接抛出异常,因为如果外面有事务的话,不会走到这里了

if (def.getPropagationBehavior() == TransactionDefinition.PROPAGATION_MANDATORY) {

throw new IllegalTransactionStateException(

"No existing transaction found for transaction marked with propagation 'mandatory'");

}

// 这里就是常见的事务传播方式处理了,当没有嵌套事务时走这里

else if (def.getPropagationBehavior() == TransactionDefinition.PROPAGATION_REQUIRED ||

def.getPropagationBehavior() == TransactionDefinition.PROPAGATION_REQUIRES_NEW ||

def.getPropagationBehavior() == TransactionDefinition.PROPAGATION_NESTED) {

SuspendedResourcesHolder suspendedResources = suspend(null);

if (debugEnabled) {

logger.debug("Creating new transaction with name [" + def.getName() + "]: " + def);

}

try {

return startTransaction(def, transaction, debugEnabled, suspendedResources);

}

catch (RuntimeException | Error ex) {

resume(null, suspendedResources);

throw ex;

}

}

else {

// 设置的参数不在支持的范围,创建个空的事务。

if (def.getIsolationLevel() != TransactionDefinition.ISOLATION_DEFAULT && logger.isWarnEnabled()) {

logger.warn("Custom isolation level specified but no actual transaction initiated; " +

"isolation level will effectively be ignored: " + def);

}

boolean newSynchronization = (getTransactionSynchronization() == SYNCHRONIZATION_ALWAYS);

return prepareTransactionStatus(def, null, true, newSynchronization, debugEnabled, null);

}

}

进入handleExistingTransaction方法,就可以找到调用挂起的方法了。

if (definition.getPropagationBehavior() == TransactionDefinition.PROPAGATION_NOT_SUPPORTED) {

if (debugEnabled) {

logger.debug("Suspending current transaction");

}

// 挂起外层事务

// 挂起后,会把当前和线程绑定的资源解绑

// 返回的 SuspendedResourcesHolder 就是外层事务的具体信息

// 之后会和新创建的 TransactionStatus 的 suspendedResources 属性绑定

Object suspendedResources = suspend(transaction);

boolean newSynchronization = (getTransactionSynchronization() == SYNCHRONIZATION_ALWAYS);

// 第二个参数是 transcation,传了个null进去,这样返回的 TransactionStatus 的 actualTransactionActive 属性就会变成false,就是不支持事务了,而 actualTransactionActive 是一个 ThreadLocal 类型的变量,就是每个线程独有的。

return prepareTransactionStatus(

definition, null, false, newSynchronization, debugEnabled, suspendedResources);

}

suspend的代码就不列举出来了,上面代码注释中讲了个大概,最后挂起的事务在org.springframework.transaction.support.AbstractPlatformTransactionManager#processCommit方法的finally块中调用cleanupAfterCompletion方法进行处理。因为这篇文章不是具体讲这个的,所以细节也就不再赘述了。

问题总结

在此,导致服务假死的原因就理清楚了。在并发的情况下,同时8个线程获取到了连接,但是后面挂起了事务,再次取新连接时,导致阻塞排队了,造成原因有2点:

- 写配置对象时,完全不考虑参数的问题,随意覆盖,导致了写在配置文件的配置失效。

- 事务传播随意使用,不谨慎考虑使用场景。

这种问题埋下的坑,老实说是挺难排查的。有时候框架的使用带来了方便,但是也因为这种方便,很容易导致埋下一些奇奇怪怪的bug,增加排查难度。

所以,使用一个框架,要知其然知其所以然,多看源码解决问题,要不就一直都是API调用工程师。